Posted by randfish

It's tough to admit it, but many of us still practice outdated SEO tactics in the belief that they still have a great deal of positive influence. In this week's Whiteboard Friday, Rand gently sets us straight and offers up a series of replacement activities that will go much farther toward moving the needle. Share your own tips and favorites in the comments!

Video Transcription

Howdy, Moz fans, and welcome to another edition of Whiteboard Friday. This week we're going to go back in time to the prehistoric era and talk about a bunch of "dinosaur" tactics, things that SEOs still do, many of us still do, and we probably shouldn't.

We need to replace and retire a lot of these tactics. I've got five tactics here, but there are a lot more, and in fact I'd love to hear about some of yours.

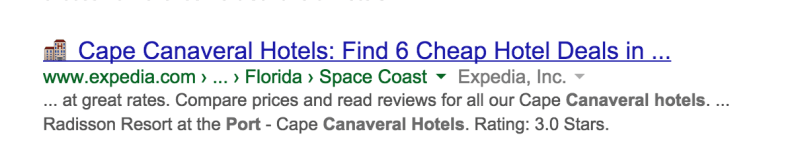

Dino Tactic #1: AdWords/Keyword Planner-based keyword research

The first one we'll start with is something we've talked about a few times here: AdWords and Keyword Planner-based keyword research. You know there are a bunch of problems with the metrics in there, but I still see a lot of folks starting their keyword research there and then expanding into other tools.

Replace it with clickstream data-driven tools with Difficulty and CTR %

My suggestion would be to start with a broader set of sources if you possibly can. If you have the budget, replace this with something driven by clickstream data, like Ahrefs, SEMrush, or Keyword Explorer. Even Google Search Suggest and related searches, plus Google Trends, tend to be better at capturing more of the keywords people actually search for.

Why it doesn't work

I think it's just because AdWords hides so many keywords that Google doesn't consider commercially relevant. It's too inaccurate, especially the volume data. If you're actually creating an AdWords campaign, the volume data gets slightly more granular, but we've found it is still highly inaccurate compared to what you see when you actually run that campaign.

It's too imprecise, and it lacks a bunch of critical metrics, including difficulty and click-through rate percentage, which you've got to know in order to prioritize keywords effectively.
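To make the prioritization point concrete, here's a minimal Python sketch of ranking keywords once you have volume, difficulty, and CTR % for each. The scoring formula is hypothetical: expected organic clicks, discounted by difficulty. So are the assumed 0-100 difficulty scale and the example keywords and numbers. None of this is how any particular tool computes its metrics.

```python
from dataclasses import dataclass


@dataclass
class Keyword:
    term: str
    monthly_volume: int  # estimated searches per month, e.g. from a clickstream-based tool
    difficulty: int      # assumed 0-100 scale; higher means harder to rank
    organic_ctr: float   # assumed fraction (0-1) of searches that click an organic result


def opportunity(kw: Keyword) -> float:
    """Hypothetical score: expected organic clicks, discounted by ranking difficulty."""
    expected_clicks = kw.monthly_volume * kw.organic_ctr
    return expected_clicks * (1 - kw.difficulty / 100)


def prioritize(keywords: list[Keyword]) -> list[Keyword]:
    """Sort keywords from highest to lowest opportunity score."""
    return sorted(keywords, key=opportunity, reverse=True)


# Made-up example data: the head term has big volume, but high difficulty
# and a low organic CTR (SERP features soaking up clicks) drag its score down.
keywords = [
    Keyword("blue shoes", 20_000, 85, 0.35),
    Keyword("navy blue running shoes", 3_000, 30, 0.65),
    Keyword("blue shoes for a wedding", 800, 20, 0.60),
]

for kw in prioritize(keywords):
    print(f"{kw.term}: {opportunity(kw):.0f}")
```

A multiplicative difficulty discount is just one simple choice. A stronger site might reasonably weight difficulty less heavily.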

Dino Tactic #2: Subdomains and separate domains for SERP domination

Next up, subdomains and separate domains for SERP domination. Classically, if you wanted to own the first page of Google search results for a branded or unbranded query, maybe because you just wanted to totally dominate it, one of the ways to do this was to add a bunch of subdomains to your website or register some separate domains so that you'd be able to control that top 10.

Why it doesn't work

What has happened recently, though, is that Google has started giving priority to multiple subpages from a single domain in a single SERP. You can see this, for example, with Yelp on virtually any restaurant-related search, or with LinkedIn on a lot of business-topic and job-related searches.

You can see it with Quora on a bunch of question-style searches, where they'll come up for all of them, or with Stack Overflow, which comes up for a lot of engineering and development-related questions.

Replace it with barnacle SEO and subfolder hosted content

So one of the better ways to do this nowadays is with barnacle SEO and subfolder-hosted content. You don't have to put your content on a separate subdomain in order to rank multiple times in the same SERP.

Barnacle SEO is also super handy because Google is giving a lot of benefit to websites like Yelp, LinkedIn, and Quora, which host content and profiles you can create or generate. That's a really good way to go. This is mostly just because of the shift from subdomains being the way to get into SERPs multiple times to individual pages being that path.

Dino Tactic #3: Prioritizing number one rankings over other traffic-driving SEO techniques

Third, prioritizing number one rankings over other traffic-driving SEO techniques. This is probably one of the most common "dinosaur" tactics I see, where a lot of folks who are familiar with the SEO world from maybe having used consultants or agencies or brought it in-house 10, 15, 20 years ago are still obsessed with that number one organic ranking over everything else.

Replace it with SERP feature SEO (especially featured snippets) and long-tail targeting

In fact, chasing number one is often a pretty poor ROI investment compared to things like SERP features, especially the featured snippet, which is getting more and more popular. It's used in voice search, and it often doesn't need to come from the number one result in the SERP. It can come from the result ranking number three, number four, or number seven.

Winning the featured snippet puts that result at the very top of the page, and its click-through rate is often higher than number one's, which makes SERP features a big way to go. The featured snippet isn't the only one, either. There's image SEO, local SEO when the local pack appears, news SEO, and potentially a Twitter profile that can rank in those results when Google shows tweets.

And, of course, there's long-tail targeting: going after keywords that are less competitive, where you don't need to beat as many folks to get that number one ranking spot. Often, in aggregate, the long tail can bring in more than ranking number one for that "money" keyword, the primary keyword you're going after.

Why it doesn't work

Why is this happening? Well, it's because SERP features are biasing the click-through rate such that number one just isn't worth what it used to be, and the long tail is often just higher ROI per hour spent.
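Here's a back-of-the-envelope comparison in Python of the two approaches. Every number in it is invented for illustration, including the search volumes and the click-through rates, which are written as whole percentages. The point is only the arithmetic: expected clicks equal volume times CTR, summed across many smaller terms.

```python
def expected_clicks(monthly_volume: int, ctr_pct: int) -> float:
    """Expected monthly clicks for a ranking, given volume and CTR as a whole percentage."""
    return monthly_volume * ctr_pct / 100


# Hypothetical "money" keyword: ranking #1, but a featured snippet and other
# SERP features pull the #1 click-through rate down to an assumed 15%.
head_clicks = expected_clicks(10_000, 15)

# Hypothetical long-tail terms you could realistically rank #1 for:
# (monthly volume, assumed CTR % at position one on a less crowded SERP)
long_tail = [
    (400, 45), (350, 50), (300, 50), (600, 40), (250, 55),
    (500, 45), (450, 40), (300, 60), (200, 50), (350, 45),
]
long_tail_clicks = sum(expected_clicks(vol, ctr) for vol, ctr in long_tail)

print(f"Head term at #1: {head_clicks:.0f} clicks/month")
print(f"Ten long-tail terms at #1: {long_tail_clicks:.0f} clicks/month")
```

With different assumed CTRs the comparison can easily flip. That's why it's worth running the numbers for your own keywords rather than assuming number one is always the prize.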

Dino Tactic #4: Moving up rankings with link building alone

Fourth, moving up the rankings on link building alone. Again, I see a lot of people do this. They're ranking number 5, number 10, number 20, and they think, "Okay, I'm ranking in the first couple of pages of Google. My next step is to link build my way to the top."

Why it no longer works on its own

Granted, historically, back in the dinosaur era (the dinosaur era being 2011), this totally worked. This was "the" path to higher rankings. Once you were sort of in the consideration set, links would get you most of the way up to the top. But today, that's not the case.

Replace it with searcher task accomplishment, UX optimization, content upgrades, and brand growth

Instead, I'm going to suggest you retire that and replace it with searcher task accomplishment. We've seen a bunch of people invest in optimizing for it and springboard their sites, even with worse links, lower DA, all of that kind of stuff. Then there's UX optimization: getting the user experience down and nailing the format of the content so that it better serves searchers.

There are also content upgrades, which means improving the actual content on the page, and brand growth, which means associating your brand more with the topic or the keyword. Why is this happening? Well, it feels like links alone just aren't enough today. They're still a powerful ranking factor, and we certainly can't ignore them entirely.

But if you want to unseat higher ranked pages, these types of investments are often much easier to make and more fruitful.

Dino Tactic #5: Obsessing about keyword placement in certain tags/areas

All right, number five. Last but not least, obsessing about keyword placement in certain tags and certain areas. For example, people spend inordinate amounts of time and energy making sure that the H1 and H2 headline tags contain keywords. They make sure the URL contains the keywords in exactly the format they want with the hyphens, that text is repeated a certain number of times in the content, and that headlines and titles are structured in certain ways.

Why it (kind of) doesn't work

It's not that this doesn't work. Certainly there's a bare minimum. We've got to have our keyword used in the title, and we definitely want it in the headline. If the headline isn't in an H1 tag, I think we can live with that. That's absolutely fine. Instead of squeezing the last 0.01% of value out of those tags, I would urge you to move some of that same obsession into related keywords and related topics. Make sure that the body content uses and explains the subjects, the topics, and the words and phrases that Google knows searchers associate with a given topic.

My favorite example of this: if you're trying to rank for "New York neighborhoods" and your page doesn't include the words Brooklyn, Manhattan, Bronx, Queens, or Staten Island, your chances of ranking are much, much worse. You can have all the links and the perfect keyword targeting in your H1, all of that stuff. But if you're not using those neighborhood terms that Google clearly associates with the topic and with the searcher's query, you're probably not going to rank.

Replace it with obsessing over related keywords and topics

This is true no matter what you're trying to rank for. I don't care if it's blue shoes or men's watches or B2B SaaS products. Google cares a lot more about whether the content solves the searcher's query. When we see folks work related topics and related keywords into their content, it's often correlated with big ranking improvements.

I was talking to an SEO a few weeks ago who did exactly this. They audited their site and found the 5 to 10 terms they felt were missing from the content. Then they added those terms intelligently, in a way that was actually descriptive and useful. After that, they saw rankings shoot up with nothing else, no other work. Really, really impressive stuff.
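A first pass at that kind of audit can be automated. Here's a minimal Python sketch that flags which related terms never appear in a page's text, using the "New York neighborhoods" example from earlier. The term list, the sample page copy, and the function name `missing_terms` are hypothetical. The script only checks presence, using case-insensitive, whole-word matching. Working the terms in so they're descriptive and useful is still a human job.

```python
import re


def missing_terms(page_text: str, related_terms: list[str]) -> list[str]:
    """Return the related terms that never appear in the page text.

    Matching is case-insensitive and on whole words, so "Queens" does not
    match "Queensland".
    """
    text = page_text.lower()
    missing = []
    for term in related_terms:
        pattern = r"\b" + re.escape(term.lower()) + r"\b"
        if re.search(pattern, text) is None:
            missing.append(term)
    return missing


# Hypothetical related terms for the query "New York neighborhoods"
neighborhoods = ["Brooklyn", "Manhattan", "Bronx", "Queens", "Staten Island"]

# Hypothetical page copy that only covers two of the five boroughs
page = (
    "A guide to New York neighborhoods: from Manhattan lofts "
    "to the brownstones of Brooklyn."
)

print(missing_terms(page, neighborhoods))  # ['Bronx', 'Queens', 'Staten Island']
```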

So take some of these dino tactics, try retiring them and replacing them with these modern ones, and see if your results don't come out better too. I look forward to your thoughts on other dino tactics in the comments. We'll see you again next week for another edition of Whiteboard Friday. Take care.

Video transcription by Speechpad.com

Sign up for The Moz Top 10, a semimonthly mailer updating you on the top ten hottest pieces of SEO news, tips, and rad links uncovered by the Moz team. Think of it as your exclusive digest of stuff you don"t have time to hunt down but want to read!

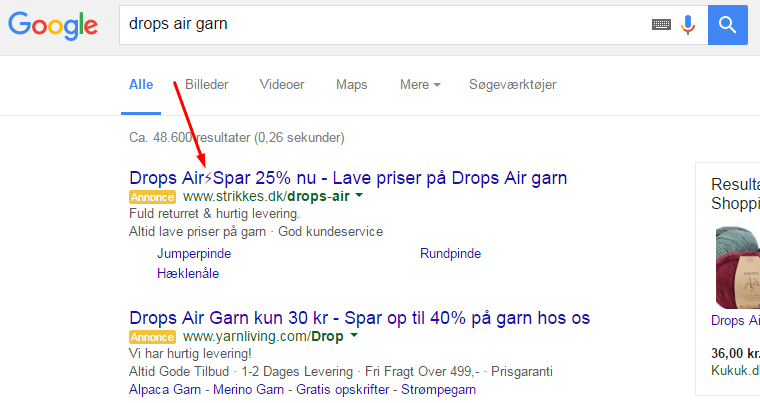

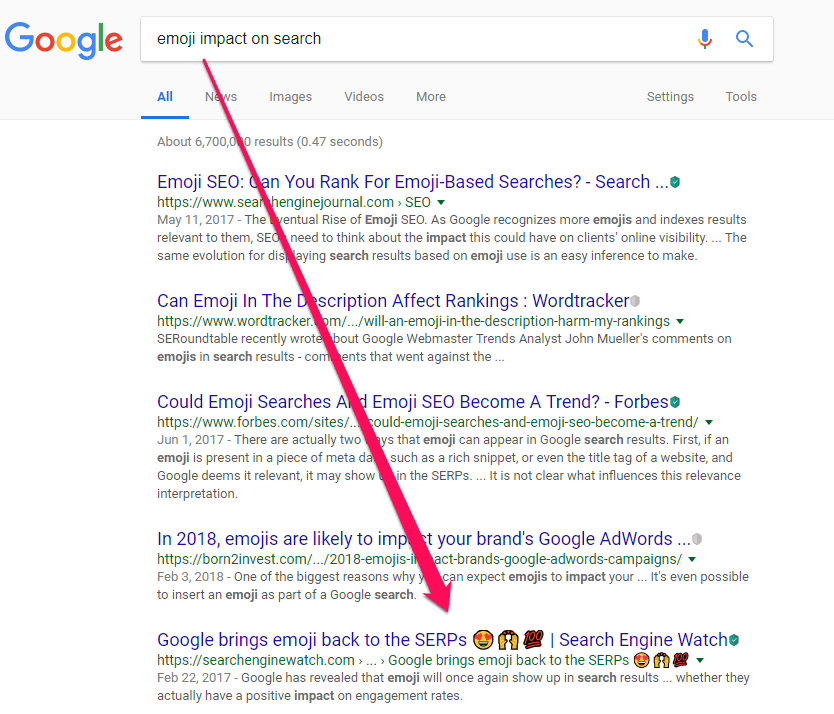

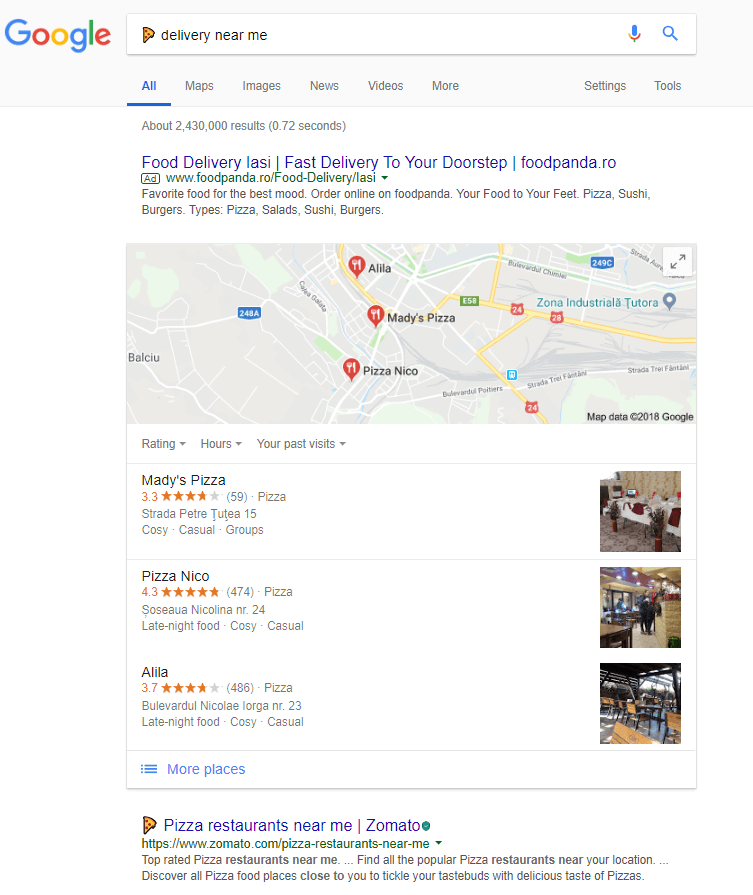

delivery near me” or “

delivery near me” or “

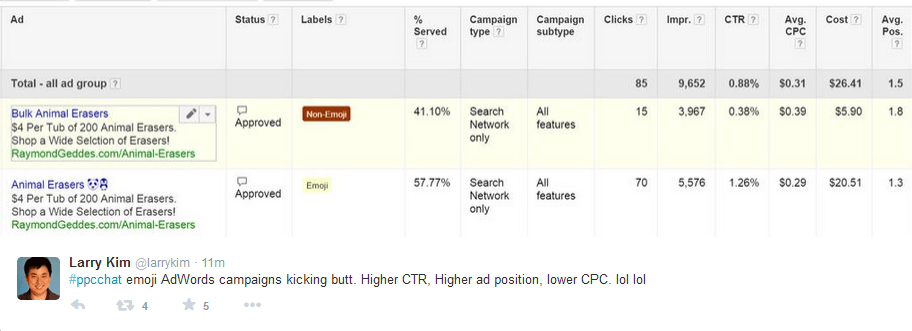

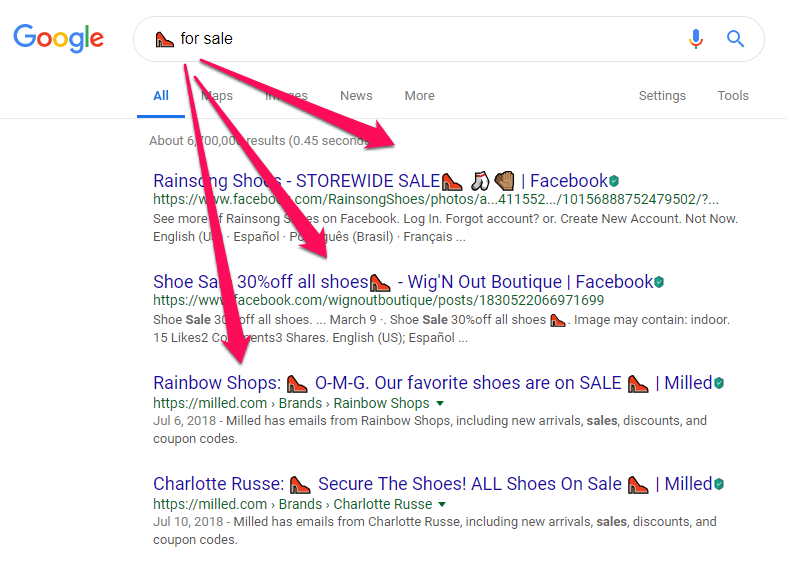

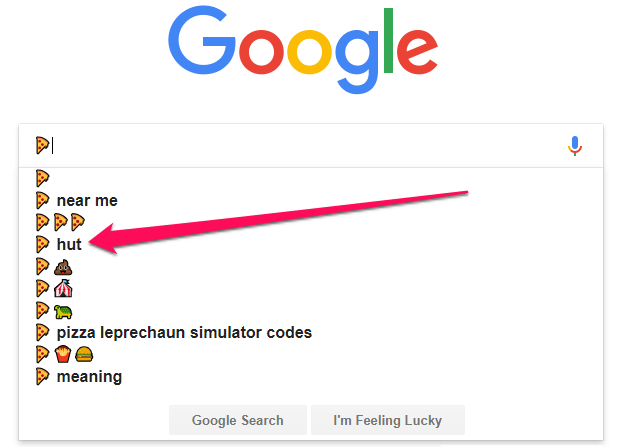

l trailer” (Deadpool trailer) – which was actually used in a billboard to promote the movie – or Google search for specific actors ( Kristen

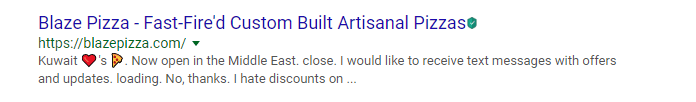

l trailer” (Deadpool trailer) – which was actually used in a billboard to promote the movie – or Google search for specific actors ( Kristen  , Kevin 🥓 and so on) you can get accurate results just as if you’d searched using text.

, Kevin 🥓 and so on) you can get accurate results just as if you’d searched using text.